Activity Report 2014

Project-Team STARS

Spatio-Temporal Activity Recognition Systems

RESEARCH CENTER

Sophia Antipolis -Méditerranée

THEME

Vision, perception and multimedia interpretation

Table of contents

1. Members ................................................................................ 1

2. Overall Objectives ........................................................................ 2

2.1.1. Research Themes 2

2.1.2. International and Industrial Cooperation 4

3. Research Program ........................................................................ 4

3.1. Introduction 4

3.2.2. Appearance Models and People Tracking 5

3.2.3. Learning Shape and Motion 6

3.3. Semantic Activity Recognition 6

3.3.1. Introduction 6

3.3.2. High Level Understanding 6

3.3.3. Learning for Activity Recognition 7

3.3.4. Activity Recognition and Discrete Event Systems 7

3.4. Software Engineering for Activity Recognition 7

3.4.1. Platform Architecture for Activity Recognition 7

3.4.2. Discrete Event Models of Activities 9

3.4.3. Model-Driven Engineering for Configuration and Control and Control of Video Surveillance systems 10

4. Application Domains .....................................................................10

4.1. Introduction 10

4.2. Video Analytics 10

4.3. Healthcare Monitoring 11

4.3.1. Topics 11

4.3.2. Ethical and Acceptability Issues 11

5. New Software and Platforms ............................................................. 11

5.1. SUP 11

5.1.1. Presentation 12

5.1.2. Improvements 13

5.2. ViSEvAl 13

5.3. Clem 16

6. New Results ............................................................................. 16

6.1. Highlights of the Year 16

6.2. Introduction 16

6.2.1. Perception for Activity Recognition 16

6.2.2. Semantic Activity Recognition 18

6.2.3. Software Engineering for Activity Recognition 19

6.3. People Detection for Crowded Scenes 19

6.3.1. Early Work 19

6.3.2. Current Work 20

6.4. Walking Speed Detection on a Treadmill using an RGB-D camera : experimentations and results 20

6.5. Head Detection Using RGB-D Camera 22

6.6. Video Segmentation and Multiple Object Tracking 24

6.7. Enforcing Monotonous Shape Growth or Shrinkage in Video Segmentation 26

6.8. Multi-label Image Segmentation with Partition Trees and Shape Prior 26

6.9. Automatic Tracker Selection and Parameter Tuning for Multi-object Tracking 27

6.10. An Approach to Improve Multi-object Tracker Quality Using Discriminative Appearances and Motion Model Descriptor 29

6.11. Person Re-identification by Pose Priors 30

6.12. Global Tracker : An Online Evaluation Framework to Improve Tracking Quality 31

6.13. Human Action Recognition in Videos 34

6.14. Action Recognition Using 3D Trajectories with Hierarchical Classifier 34

6.15. Action Recognition using Video Brownian Covariance Descriptor for Human 35

6.16. Towards Unsupervised Sudden Group Movement Discovery for Video Surveillance 36

6.17. Autonomous Monitoring for Securing European Ports 36

6.18. Video Understanding for Group Behavior Analysis 37

6.19. Evaluation of an Event Detection Framework for Older People Monitoring: from Minute to Hour-scale Monitoring and Patients Autonomy and Dementia Assessment 38

6.20. Uncertainty Modeling Framework for Constraint-based Event Detection in Vision Systems 39

6.21. Assisted Serious Game for Older People 41

6.22. Enhancing Pre-defined Event Models Using Unsupervised Learning 43

6.23. Using Dense Trajectories to Enhance Unsupervised Action Discovery 44

6.24. Abnormal Event Detection in Videos and Group Behavior Analysis 45

6.24.1. Abnormal Event Detection 46

6.24.2. Group Behavior Analysis 48

6.25. Model-Driven Engineering for Activity Recognition Systems 48

6.25.1. Feature Models 48

6.25.2. Configuration Adaptation at Run Time 49

6.26. Scenario Analysis Module 50

6.27. The Clem Workflow 50

6.28. Multiple Services for Device Adaptive Platform for Scenario Recognition 51

7. Bilateral Contracts and Grants with Industry ............................................. 52

8. Partnerships and Cooperations ........................................................... 53

8.1. National Initiatives 53

8.1.1. ANR 53

8.1.1.1. MOVEMENT 53

8.1.1.2. SafEE 53

8.1.2. Investment of Future 53

8.1.3. Large Scale Inria Initiative 54

8.1.4. Other Collaborations 54

8.2. European Initiatives 54

8.2.1.1. CENTAUR 54

8.2.1.2. PANORAMA 55

8.2.1.3. SUPPORT 55

8.2.1.4. Dem@Care 55

8.3. International Initiatives 56

8.3.1. Inria International Partners 56

8.3.1.1.1. Collaborations with Asia: 56

8.3.1.1.2. Collaboration with U.S.A.: 56

8.3.1.1.3. Collaboration with Europe: 56

8.3.2. Participation in Other International Programs 56

8.4. International Research Visitors 56

9. Dissemination ........................................................................... 58

9.1. Promoting Scientific Activities 58

9.1.1. Scientific Events Organisation 58

9.1.1.1. General chair, scientific chair 58

9.1.1.2. Member of the organizing committee 58

9.1.2. Scientific Events Selection 58

9.1.2.1. Member of the conference program committee 58

9.1.2.2. Reviewer 58

9.1.3. Journal 58

9.1.3.1. Member of the editorial board 58

9.1.3.2. Reviewer 58

9.1.4. Invited Talks 59

9.2. Teaching -Supervision -Juries 59

9.2.1. Teaching 59

9.2.2. Supervision 59

9.2.3. Juries 59

9.2.3.1. PhD, HDR 59

9.2.3.2. Expertise 60

9.3. Popularization 60

10. Bibliography ...........................................................................60

Project-Team STARS

Keywords: Perception, Semantics, Machine Learning, Software Engineering, Cognition

Creation of the Team: 2012 January 01, updated into Project-Team: 2013 January 01.

1. Members

Research Scientists

François Brémond [Team leader, Inria, Senior Researcher, HdR] Guillaume Charpiat [Inria, Researcher] Daniel Gaffé [Univ. Nice, Associate Professor] Sabine Moisan [Inria, Researcher, HdR] Annie Ressouche [Inria, Researcher] Monique Thonnat [Inria, Senior Researcher, HdR] Jean-Paul Rigault [External collaborator,Professor Univ. Nice]

Engineers

Slawomir Bak [Inria] Vasanth Bathrinarayanan [Inria, granted by FP7 DEM@CARE project] Carlos-Fernando Crispim Junior [Inria, granted by FP7 DEM@CARE project] Giuseppe Donatiello [Inria, granted by FP7 DEM@CARE project] Anaïs Ducoffe [NeoSensys start-up,from Oct 2014] Baptiste Fosty [Inria, granted by Caisse des Dépôts et Consignations] Rachid Guerchouche [NeoSensys start-up, from Oct 2014] Julien Gueytat [Inria, until Oct 2014] Anh-Tuan Nghiem [Inria, until Aug 2014, granted by Caisse des Dépôts et Consignations] Jacques Serlan [Inria] Malik Souded [Inria, granted by Min. de l’Economie] Sofia Zaidenberg [NeoSensys start-up, until Aug 2014] Bernard Boulay [NeoSensys start-up] Yves Pichon [NeoSensys start-up] Annunziato Polimeni [NeoSensys start-up, from Apr 2014]

PhD Students

Julien Badie [Inria] Piotr Tadeusz Bilinski [Inria] Carolina Garate Oporto [Inria] Michal Koperski [Inria] Thi Lan Anh Nguyen [Inria, from Dec 2014] Minh Khue Phan Tran [Cifre grant] Fnu Ratnesh Kumar [Inria] Auriane Gros [Nice Hospital University, since Sep 2014]

Post-Doctoral Fellows

Duc Phu Chau [Inria, granted by Min. de l’Economie] Serhan Cosar [Inria] Antitza Dantcheva [Inria, from Mar 2014] Salma Zouaoui-Elloumi [Inria, until Aug 2014]

Visiting Scientists

Vania Bogorny [Guest Professor, from Apr 2014] Luis Campos Alvares [Guest Professor, from May 2014] Jesse Hoey [Guest Professor, from Sep 2014]

Adlen Kerboua [PhD and associate professor (Algeria), from Oct 2014 until Nov 2014] Pavel Vacha [Research engineer (Honeywell Praha), from May 2014]

Administrative Assistants

Jane Desplanques [Inria] Nadežda Lacroix-Coste [Inria, from Oct 2014]

Others

Mohamed Bouatira [Inria, Internship, from Mar 2014 until Sep 2014] Javier Ortiz [Inria, pre PhD, from Sep 2014] Ines Sarray [Inria, from Apr 2014 until Oct 2014] Omar Abdalla [Inria, Internship, from Apr 2014 until Sep 2014] Jean Barboni [Inria, Internship, from May 2014 until Aug 2014] Agustín Caverzasi [Inria, Internship, until Feb 2014] Marine Chabran [Inria, Internship, from Sep 2014] Sara Elkerdawy [Inria, Internship, Mar 2014] Alvaro Gomez Uria Covella [Inria, Internship, from Mar 2014 until Dec 2014] Filipe Martins de Melo [Inria, Internship, from Apr 2014 until Sep 2014] Pablo Daniel Pusiol [Inria, Internship, from Apr 2014 until Sep 2014] Carola Strumia [Inria, Internship, from Oct 2014] Kouhua Zhou [Inria, Internship, from Jun 2014 until Sep 2014] Etienne Corvée [External Collaborator, Linkcare Services] Carolina Da Silva Gomes Crispim [Internship, from Jul 2014 until Sep 2014] Alexandre Derreumaux [CHU Nice] Auriane Gros [CHU Nice, from Oct 2014] Vaibhav Katiyar [Internship, until Feb 2014] Farhood Negin [Inria, Internship,from Apr 2014 until Nov 2014] Thi Lan Anh Nguyen [Inria, Internship, from Mar 2014 until Oct 2014] Ngoc Hai Pham [Inria,Internship, from May 2014 until Nov 2014] Silviu-Tudor Serban [Internship, until Jan 2014] Kartick Subramanian [Internship, until Aug 2014] Jean-Yves Tigli [External collaborator, Associate professor Univ. Nice] Philippe Robert [External Collaborator, Professor CHU Nice] Alexandra Konig [External Collaborator, PhD Maastrich University]

2. Overall Objectives

2.1. Presentation

2.1.1. Research Themes

STARS (Spatio-Temporal Activity Recognition Systems) is focused on the design of cognitive systems for Activity Recognition. We aim at endowing cognitive systems with perceptual capabilities to reason about an observed environment, to provide a variety of services to people living in this environment while preserving their privacy. In today world, a huge amount of new sensors and new hardware devices are currently available, addressing potentially new needs of the modern society. However the lack of automated processes (with no human interaction) able to extract a meaningful and accurate information (i.e. a correct understanding of the situation) has often generated frustrations among the society and especially among older people. Therefore, Stars objective is to propose novel autonomous systems for the real-time semantic interpretation of dynamic scenes observed by sensors. We study long-term spatio-temporal activities performed by several interacting agents such as human beings, animals and vehicles in the physical world. Such systems also raise fundamental software engineering problems to specify them as well as to adapt them at run time.

We propose new techniques at the frontier between computer vision, knowledge engineering, machine learning and software engineering. The major challenge in semantic interpretation of dynamic scenes is to bridge the gap between the task dependent interpretation of data and the flood of measures provided by sensors. The problems we address range from physical object detection, activity understanding, activity learning to vision system design and evaluation. The two principal classes of human activities we focus on, are assistance to older adults and video analytics.

A typical example of a complex activity is shown in Figure 1 and Figure 2 for a homecare application. In this example, the duration of the monitoring of an older person apartment could last several months. The activities involve interactions between the observed person and several pieces of equipment. The application goal is to recognize the everyday activities at home through formal activity models (as shown in Figure 3) and data captured by a network of sensors embedded in the apartment. Here typical services include an objective assessment of the frailty level of the observed person to be able to provide a more personalized care and to monitor the effectiveness of a prescribed therapy. The assessment of the frailty level is performed by an Activity Recognition System which transmits a textual report (containing only meta-data) to the general practitioner who follows the older person. Thanks to the recognized activities, the quality of life of the observed people can thus be improved and their personal information can be preserved.

Figure 1. Homecare monitoring: the set of sensors embedded in an apartment

Figure 2. Homecare monitoring: the different views of the apartment captured by 4 video cameras

Activity (PrepareMeal, PhysicalObjects( (p : Person), (z : Zone), (eq : Equipment)) Components( (s_inside : InsideKitchen(p, z))

(s_close : CloseToCountertop(p, eq)) (s_stand : PersonStandingInKitchen(p, z)))

Constraints( (z->Name = Kitchen) (eq->Name = Countertop) (s_close->Duration >= 100) (s_stand->Duration >= 100))

Annotation( AText("prepare meal") AType("not urgent")))

Figure 3. Homecare monitoring: example of an activity model describing a scenario related to the preparation of a meal with a high-level language

The ultimate goal is for cognitive systems to perceive and understand their environment to be able to provide appropriate services to a potential user. An important step is to propose a computational representation of people activities to adapt these services to them. Up to now, the most effective sensors have been video cameras due to the rich information they can provide on the observed environment. These sensors are currently perceived as intrusive ones. A key issue is to capture the pertinent raw data for adapting the services to the people while preserving their privacy. We plan to study different solutions including of course the local processing of the data without transmission of images and the utilisation of new compact sensors developed for interaction (also called RGB-Depth sensors, an example being the Kinect) or networks of small non visual sensors.

2.1.2. International and Industrial Cooperation

Our work has been applied in the context of more than 10 European projects such as COFRIEND, ADVISOR, SERKET, CARETAKER, VANAHEIM, SUPPORT, DEM@CARE, VICOMO. We had or have industrial collaborations in several domains: transportation (CCI Airport Toulouse Blagnac, SNCF, Inrets, Alstom, Ratp, GTT (Italy), Turin GTT (Italy)), banking (Crédit Agricole Bank Corporation, Eurotelis and Ciel), security (Thales R&T FR, Thales Security Syst, EADS, Sagem, Bertin, Alcatel, Keeneo), multimedia (Multitel (Belgium), Thales Communications, Idiap (Switzerland)), civil engineering (Centre Scientifique et Technique du Bâtiment (CSTB)), computer industry (BULL), software industry (AKKA), hardware industry (ST-Microelectronics) and health industry (Philips, Link Care Services, Vistek).

We have international cooperations with research centers such as Reading University (UK), ENSI Tunis (Tunisia), National Cheng Kung University, National Taiwan University (Taiwan), MICA (Vietnam), IPAL, I2R (Singapore), University of Southern California, University of South Florida, University of Maryland (USA).

3. Research Program

3.1. Introduction

Stars follows three main research directions: perception for activity recognition, semantic activity recognition, and software engineering for activity recognition. These three research directions are interleaved: the software engineering reserach direction provides new methodologies for building safe activity recognition systems and the perception and the semantic activity recognition directions provide new activity recognition techniques which are designed and validated for concrete video analytics and healthcare applications. Conversely, these concrete systems raise new software issues that enrich the software engineering research direction.

Transversally, we consider a new research axis in machine learning, combining a priori knowledge and learning techniques, to set up the various models of an activity recognition system. A major objective is to automate model building or model enrichment at the perception level and at the understanding level.

3.2. Perception for Activity Recognition

Participants: Guillaume Charpiat, François Brémond, Sabine Moisan, Monique Thonnat.

Computer Vision; Cognitive Systems; Learning; Activity Recognition.

3.2.1. Introduction

Our main goal in perception is to develop vision algorithms able to address the large variety of conditions characterizing real world scenes in terms of sensor conditions, hardware requirements, lighting conditions, physical objects, and application objectives. We have also several issues related to perception which combine machine learning and perception techniques: learning people appearance, parameters for system control and shape statistics.

3.2.2. Appearance Models and People Tracking

An important issue is to detect in real-time physical objects from perceptual features and predefined 3D models. It requires finding a good balance between efficient methods and precise spatio-temporal models. Many improvements and analysis need to be performed in order to tackle the large range of people detection scenarios.

Appearance models. In particular, we study the temporal variation of the features characterizing the appearance of a human. This task could be achieved by clustering potential candidates depending on their position and their reliability. This task can provide any people tracking algorithms with reliable features allowing for instance to (1) better track people or their body parts during occlusion, or to (2) model people appearance for re-identification purposes in mono and multi-camera networks, which is still an open issue. The underlying challenge of the person re-identification problem arises from significant differences in illumination, pose and camera parameters. The re-identification approaches have two aspects: (1) establishing correspondences between body parts and (2) generating signatures that are invariant to different color responses. As we have already several descriptors which are color invariant, we now focus more on aligning two people detections and on finding their corresponding body parts. Having detected body parts, the approach can handle pose variations. Further, different body parts might have different influence on finding the correct match among a whole gallery dataset. Thus, the re-identification approaches have to search for matching strategies. As the results of the re-identification are always given as the ranking list, re-identification focuses on learning to rank. "Learning to rank" is a type of machine learning problem, in which the goal is to automatically construct a ranking model from a training data.

Therefore, we work on information fusion to handle perceptual features coming from various sensors (several cameras covering a large scale area or heterogeneous sensors capturing more or less precise and rich information). New 3D RGB-D sensors are also investigated, to help in getting an accurate segmentation for specific scene conditions.

Long term tracking. For activity recognition we need robust and coherent object tracking over long periods of time (often several hours in videosurveillance and several days in healthcare). To guarantee the long term coherence of tracked objects, spatio-temporal reasoning is required. Modelling and managing the uncertainty of these processes is also an open issue. In Stars we propose to add a reasoning layer to a classical Bayesian framework modelling the uncertainty of the tracked objects. This reasoning layer can take into account the a priori knowledge of the scene for outlier elimination and long-term coherency checking.

Controling system parameters. Another research direction is to manage a library of video processing programs. We are building a perception library by selecting robust algorithms for feature extraction, by insuring they work efficiently with real time constraints and by formalizing their conditions of use within a program supervision model. In the case of video cameras, at least two problems are still open: robust image segmentation and meaningful feature extraction. For these issues, we are developing new learning techniques.

3.2.3. Learning Shape and Motion

Another approach, to improve jointly segmentation and tracking, is to consider videos as 3D volumetric data and to search for trajectories of points that are statistically coherent both spatially and temporally. This point of view enables new kinds of statistical segmentation criteria and ways to learn them.

We are also using the shape statistics developed in [5] for the segmentation of images or videos with shape prior, by learning local segmentation criteria that are suitable for parts of shapes. This unifies patchbased detection methods and active-contour-based segmentation methods in a single framework. These shape statistics can be used also for a fine classification of postures and gestures, in order to extract more precise information from videos for further activity recognition. In particular, the notion of shape dynamics has to be studied.

More generally, to improve segmentation quality and speed, different optimization tools such as graph-cuts can be used, extended or improved.

3.3. Semantic Activity Recognition

Participants: Guillaume Charpiat, François Brémond, Sabine Moisan, Monique Thonnat.

Activity Recognition, Scene Understanding, Computer Vision

3.3.1. Introduction

Semantic activity recognition is a complex process where information is abstracted through four levels: signal (e.g. pixel, sound), perceptual features, physical objects and activities. The signal and the feature levels are characterized by strong noise, ambiguous, corrupted and missing data. The whole process of scene understanding consists in analyzing this information to bring forth pertinent insight of the scene and its dynamics while handling the low level noise. Moreover, to obtain a semantic abstraction, building activity models is a crucial point. A still open issue consists in determining whether these models should be given a priori or learned. Another challenge consists in organizing this knowledge in order to capitalize experience, share it with others and update it along with experimentation. To face this challenge, tools in knowledge engineering such as machine learning or ontology are needed.

Thus we work along the following research axes: high level understanding (to recognize the activities of physical objects based on high level activity models), learning (how to learn the models needed for activity recognition) and activity recognition and discrete event systems.

3.3.2. High Level Understanding

A challenging research axis is to recognize subjective activities of physical objects (i.e. human beings, animals,

vehicles) based on a priori models and objective perceptual measures (e.g. robust and coherent object tracks). To reach this goal, we have defined original activity recognition algorithms and activity models. Activity recognition algorithms include the computation of spatio-temporal relationships between physical objects. All the possible relationships may correspond to activities of interest and all have to be explored in an efficient way. The variety of these activities, generally called video events, is huge and depends on their spatial and temporal granularity, on the number of physical objects involved in the events, and on the event complexity (number of components constituting the event).

Concerning the modelling of activities, we are working towards two directions: the uncertainty management for representing probability distributions and knowledge acquisition facilities based on ontological engineering techniques. For the first direction, we are investigating classical statistical techniques and logical approaches. For the second direction, we built a language for video event modelling and a visual concept ontology (including color, texture and spatial concepts) to be extended with temporal concepts (motion, trajectories, events ...) and other perceptual concepts (physiological sensor concepts ...).

3.3.3. Learning for Activity Recognition

Given the difficulty of building an activity recognition system with a priori knowledge for a new application, we study how machine learning techniques can automate building or completing models at the perception level and at the understanding level.

At the understanding level, we are learning primitive event detectors. This can be done for example by learning visual concept detectors using SVMs (Support Vector Machines) with perceptual feature samples. An open question is how far can we go in weakly supervised learning for each type of perceptual concept

(i.e. leveraging the human annotation task). A second direction is to learn typical composite event models for frequent activities using trajectory clustering or data mining techniques. We name composite event a particular combination of several primitive events.

3.3.4. Activity Recognition and Discrete Event Systems

The previous research axes are unavoidable to cope with the semantic interpretations. However they tend to let aside the pure event driven aspects of scenario recognition. These aspects have been studied for a long time at a theoretical level and led to methods and tools that may bring extra value to activity recognition, the most important being the possibility of formal analysis, verification and validation.

We have thus started to specify a formal model to define, analyze, simulate, and prove scenarios. This model deals with both absolute time (to be realistic and efficient in the analysis phase) and logical time (to benefit from well-known mathematical models providing re-usability, easy extension, and verification). Our purpose is to offer a generic tool to express and recognize activities associated with a concrete language to specify activities in the form of a set of scenarios with temporal constraints. The theoretical foundations and the tools being shared with Software Engineering aspects, they will be detailed in section 3.4.

The results of the research performed in perception and semantic activity recognition (first and second research directions) produce new techniques for scene understanding and contribute to specify the needs for new software architectures (third research direction).

3.4. Software Engineering for Activity Recognition

Participants: Sabine Moisan, Annie Ressouche, Jean-Paul Rigault, François Brémond.

Software Engineering, Generic Components, Knowledge-based Systems, Software Component Platform,

Object-oriented Frameworks, Software Reuse, Model-driven Engineering The aim of this research axis is to build general solutions and tools to develop systems dedicated to activity recognition. For this, we rely on state-of-the art Software Engineering practices to ensure both sound design and easy use, providing genericity, modularity, adaptability, reusability, extensibility, dependability, and maintainability.

This research requires theoretical studies combined with validation based on concrete experiments conducted in Stars. We work on the following three research axes: models (adapted to the activity recognition domain), platform architecture (to cope with deployment constraints and run time adaptation), and system verification (to generate dependable systems). For all these tasks we follow state of the art Software Engineering practices and, if needed, we attempt to set up new ones.

3.4.1. Platform Architecture for Activity Recognition

In the former project teams Orion and Pulsar, we have developed two platforms, one (VSIP), a library of real-time video understanding modules and another one, LAMA [14], a software platform enabling to design not only knowledge bases, but also inference engines, and additional tools. LAMA offers toolkits to build and to adapt all the software elements that compose a knowledge-based system.

Figure 4. Global Architecture of an Activity Recognition The grey areas contain software engineering support modules whereas the other modules correspond to software components (at Task and Component levels) or to generated systems (at Application level).

Figure 4 presents our conceptual vision for the architecture of an activity recognition platform. It consists of three levels:

• The Component Level, the lowest one, offers software components providing elementary operations and data for perception, understanding, and learning.

- –

- Perception components contain algorithms for sensor management, image and signal analysis, image and video processing (segmentation, tracking...), etc.

- –

- Understanding components provide the building blocks for Knowledge-based Systems: knowledge representation and management, elements for controlling inference engine strategies, etc.

- –

- Learning components implement different learning strategies, such as Support Vector

Machines (SVM), Case-based Learning (CBL), clustering, etc. An Activity Recognition system is likely to pick components from these three packages. Hence, tools must be provided to configure (select, assemble), simulate, verify the resulting component combination. Other support tools may help to generate task or application dedicated languages or graphic interfaces.

- The Task Level, the middle one, contains executable realizations of individual tasks that will collaborate in a particular final application. Of course, the code of these tasks is built on top of the components from the previous level. We have already identified several of these important tasks: Object Recognition, Tracking, Scenario Recognition... In the future, other tasks will probably enrich this level.

- For these tasks to nicely collaborate, communication and interaction facilities are needed. We shall also add MDE-enhanced tools for configuration and run-time adaptation.

- The Application Level integrates several of these tasks to build a system for a particular type of application, e.g., vandalism detection, patient monitoring, aircraft loading/unloading surveillance, etc.. Each system is parameterized to adapt to its local environment (number, type, location of sensors, scene geometry, visual parameters, number of objects of interest...). Thus configuration and deployment facilities are required.

The philosophy of this architecture is to offer at each level a balance between the widest possible genericity

and the maximum effective reusability, in particular at the code level. To cope with real application requirements, we shall also investigate distributed architecture, real time implementation, and user interfaces.

Concerning implementation issues, we shall use when possible existing open standard tools such as NuSMV for model-checking, Eclipse for graphic interfaces or model engineering support, Alloy for constraint representation and SAT solving for verification, etc. Note that, in Figure 4, some of the boxes can be naturally adapted from SUP existing elements (many perception and understanding components, program supervision, scenario recognition...) whereas others are to be developed, completely or partially (learning components, most support and configuration tools).

3.4.2. Discrete Event Models of Activities

As mentioned in the previous section (3.3) we have started to specify a formal model of scenario dealing with both absolute time and logical time. Our scenario and time models as well as the platform verification tools rely on a formal basis, namely the synchronous paradigm. To recognize scenarios, we consider activity descriptions as synchronous reactive systems and we apply general modelling methods to express scenario behaviour.

Activity recognition systems usually exhibit many safeness issues. From the software engineering point of view we only consider software security. Our previous work on verification and validation has to be pursued; in particular, we need to test its scalability and to develop associated tools. Model-checking is an appealing technique since it can be automatized and helps to produce a code that has been formally proved. Our verification method follows a compositional approach, a well-known way to cope with scalability problems in model-checking.

Moreover, recognizing real scenarios is not a purely deterministic process. Sensor performance, precision of image analysis, scenario descriptions may induce various kinds of uncertainty. While taking into account this uncertainty, we should still keep our model of time deterministic, modular, and formally verifiable. To formally describe probabilistic timed systems, the most popular approach involves probabilistic extension of timed automata. New model checking techniques can be used as verification means, but relying on model checking techniques is not sufficient. Model checking is a powerful tool to prove decidable properties but introducing uncertainty may lead to infinite state or even undecidable properties. Thus model checking validation has to be completed with non exhaustive methods such as abstract interpretation.

3.4.3. Model-Driven Engineering for Configuration and Control and Control of Video Surveillance systems

Model-driven engineering techniques can support the configuration and dynamic adaptation of video surveillance systems designed with our SUP activity recognition platform. The challenge is to cope with the many—functional as well as nonfunctional—causes of variability both in the video application specification and in the concrete SUP implementation. We have used feature models to define two models: a generic model of video surveillance applications and a model of configuration for SUP components and chains. Both of them express variability factors. Ultimately, we wish to automatically generate a SUP component assembly from an application specification, using models to represent transformations [56]. Our models are enriched with intra-and inter-models constraints. Inter-models constraints specify models to represent transformations. Feature models are appropriate to describe variants; they are simple enough for video surveillance experts to express their requirements. Yet, they are powerful enough to be liable to static analysis [75]. In particular, the constraints can be analysed as a SAT problem.

An additional challenge is to manage the possible run-time changes of implementation due to context variations (e.g., lighting conditions, changes in the reference scene, etc.). Video surveillance systems have to dynamically adapt to a changing environment. The use of models at run-time is a solution. We are defining adaptation rules corresponding to the dependency constraints between specification elements in one model and software variants in the other [55], [ 84 ], [78].

4. Application Domains

4.1. Introduction

While in our research the focus is to develop techniques, models and platforms that are generic and reusable, we also make effort in the development of real applications. The motivation is twofold. The first is to validate the new ideas and approaches we introduce. The second is to demonstrate how to build working systems for real applications of various domains based on the techniques and tools developed. Indeed, Stars focuses on two main domains: video analytics and healthcare monitoring.

4.2. Video Analytics

Our experience in video analytics [6], [ 1 ], [8] (also referred to as visual surveillance) is a strong basis which ensures both a precise view of the research topics to develop and a network of industrial partners ranging from end-users, integrators and software editors to provide data, objectives, evaluation and funding.

For instance, the Keeneo start-up was created in July 2005 for the industrialization and exploitation of Orion and Pulsar results in video analytics (VSIP library, which was a previous version of SUP). Keeneo has been bought by Digital Barriers in August 2011 and is now independent from Inria. However, Stars continues to maintain a close cooperation with Keeneo for impact analysis of SUP and for exploitation of new results.

Moreover new challenges are arising from the visual surveillance community. For instance, people detection and tracking in a crowded environment are still open issues despite the high competition on these topics. Also detecting abnormal activities may require to discover rare events from very large video data bases often characterized by noise or incomplete data.

4.3. Healthcare Monitoring

We have initiated a new strategic partnership (called CobTek) with Nice hospital [67], [ 85 ] (CHU Nice, Prof P. Robert) to start ambitious research activities dedicated to healthcare monitoring and to assistive technologies. These new studies address the analysis of more complex spatio-temporal activities (e.g. complex interactions, long term activities).

4.3.1. Topics

To achieve this objective, several topics need to be tackled. These topics can be summarized within two points: finer activity description and longitudinal experimentation. Finer activity description is needed for instance, to discriminate the activities (e.g. sitting, walking, eating) of Alzheimer patients from the ones of healthy older people. It is essential to be able to pre-diagnose dementia and to provide a better and more specialised care. Longer analysis is required when people monitoring aims at measuring the evolution of patient behavioral disorders. Setting up such long experimentation with dementia people has never been tried before but is necessary to have real-world validation. This is one of the challenge of the European FP7 project Dem@Care where several patient homes should be monitored over several months.

For this domain, a goal for Stars is to allow people with dementia to continue living in a self-sufficient manner in their own homes or residential centers, away from a hospital, as well as to allow clinicians and caregivers remotely proffer effective care and management. For all this to become possible, comprehensive monitoring of the daily life of the person with dementia is deemed necessary, since caregivers and clinicians will need a comprehensive view of the person’s daily activities, behavioural patterns, lifestyle, as well as changes in them, indicating the progression of their condition.

4.3.2. Ethical and Acceptability Issues

The development and ultimate use of novel assistive technologies by a vulnerable user group such as individuals with dementia, and the assessment methodologies planned by Stars are not free of ethical, or even legal concerns, even if many studies have shown how these Information and Communication Technologies (ICT) can be useful and well accepted by older people with or without impairments. Thus one goal of Stars team is to design the right technologies that can provide the appropriate information to the medical carers while preserving people privacy. Moreover, Stars will pay particular attention to ethical, acceptability, legal and privacy concerns that may arise, addressing them in a professional way following the corresponding established EU and national laws and regulations, especially when outside France. Now, Stars can benefit from the support of the COERLE (Comité Opérationnel d’Evaluation des Risques Légaux et Ethiques) to help it to respect ethical policies in its applications.

As presented in 3.1, Stars aims at designing cognitive vision systems with perceptual capabilities to monitor efficiently people activities. As a matter of fact, vision sensors can be seen as intrusive ones, even if no images are acquired or transmitted (only meta-data describing activities need to be collected). Therefore new communication paradigms and other sensors (e.g. accelerometers, RFID, and new sensors to come in the future) are also envisaged to provide the most appropriate services to the observed people, while preserving their privacy. To better understand ethical issues, Stars members are already involved in several ethical organizations. For instance, F. Bremond has been a member of the ODEGAM -“Commission Ethique et Droit” (a local association in Nice area for ethical issues related to older people) from 2010 to 2011 and a member of the French scientific council for the national seminar on “La maladie d’Alzheimer et les nouvelles technologies -Enjeux éthiques et questions de société” in 2011. This council has in particular proposed a chart and guidelines for conducting researches with dementia patients.

For addressing the acceptability issues, focus groups and HMI (Human Machine Interaction) experts, will be consulted on the most adequate range of mechanisms to interact and display information to older people.

5. New Software and Platforms

5.1. SUP

Figure 5. SUP workflow

5.1.1. Presentation

SUP is a Scene Understanding Software Platform (see Figure 5) written in C++ designed for analyzing video content . SUP is able to recognize events such as ’falling’, ’walking’ of a person. SUP divides the workflow of a video processing into several separated modules, such as acquisition, segmentation, up to activity recognition. Each module has a specific interface, and different plugins (corresponding to algorithms) can be implemented for a same module. We can easily build new analyzing systems thanks to this set of plugins. The order we can use those plugins and their parameters can be changed at run time and the result visualized on a dedicated GUI. This platform has many more advantages such as easy serialization to save and replay a scene, portability to Mac, Windows or Linux, and easy deployment to quickly setup an experimentation anywhere. SUP takes different kinds of input: RGB camera, depth sensor for online processing; or image/video files for offline processing.

This generic architecture is designed to facilitate:

- integration of new algorithms into SUP;

- iharing of the algorithms among the Stars team. Currently, 15 plugins are available, covering the

whole processing chain. Some plugins use the OpenCV library. Goals of SUP are twofold:

- From a video understanding point of view, to allow the Stars researchers sharing the implementation of their algorithms through this platform.

- From a software engineering point of view, to integrate the results of the dynamic management of vision applications when applying to video analytic.

The plugins cover the following research topics:

- algorithms : 2D/3D mobile object detection, camera calibration, reference image updating, 2D/3D mobile object classification, sensor fusion, 3D mobile object classification into physical objects (individual, group of individuals, crowd), posture detection, frame to frame tracking, long-term tracking of individuals, groups of people or crowd, global tacking, basic event detection (for example entering a zone, falling...), human behaviour recognition (for example vandalism, fighting,...) and event fusion; 2D & 3D visualisation of simulated temporal scenes and of real scene interpretation results; evaluation of object detection, tracking and event recognition; image acquisition (RGB and RGBD cameras) and storage; video processing supervision; data mining and knowledge discovery; image/video indexation and retrieval.

- languages : scenario description, empty 3D scene model description, video processing and understanding operator description;

- knowledge bases : scenario models and empty 3D scene models;

- learning techniques for event detection and human behaviour recognition;

5.1.2. Improvements

Currently, the OpenCV library is fully integrated with SUP. OpenCV provides standardized data types, a lot of video analysis algorithms and an easy access to OpenNI sensors such as the Kinect or the ASUS Xtion PRO LIVE. In order to supervise the GIT update progress of SUP, an evaluation script is launched automatically everyday. This script updates the latest version of SUP then compiles SUP core and SUP plugins. It executes the full processing chain (from image acquisition to activity recognition) on selected data-set samples. The obtained performance is compared with the one corresponding to the last version (i.e. day before). This script has the following objectives:

- Check daily the status of SUP and detect the compilation bugs if any.

- Supervise daily the SUP performance to detect any bugs leading to the decrease of SUP performance

and efficiency. The software is already widely disseminated among researchers, universities, and companies:

- PAL Inria partners using ROS PAL Gate as middleware

- Nice University (Informatique Signaux et Systèmes de Sophia), University of Paris Est Créteil (UPEC -LISSI-EA 3956)

- EHPAD Valrose, Institut Claude Pompidou

- European partners: Lulea University of Technology, Dublin City University,...

• Industrial partners: Toyota, LinkCareServices, Digital Barriers Updates and presentations of our framework can be found on our team website https://team.inria.fr/stars/

software . Detailed tips for users are given on our Wiki website http://wiki.inria.fr/stars and sources are hosted thanks to the Inria software developer team SED.

5.2. ViSEvAl

ViSEval is a software dedicated to the evaluation and visualization of video processing algorithm outputs. The evaluation of video processing algorithm results is an important step in video analysis research. In video processing, we identify 4 different tasks to evaluate: detection, classification and tracking of physical objects of interest and event recognition.

The proposed evaluation tool (ViSEvAl, visualization and evaluation) respects three important properties:

- To be able to visualize the algorithm results.

- To be able to visualize the metrics and evaluation results.

• To allow users to easily modify or add new metrics. The ViSEvAl tool is composed of two parts: a GUI to visualize results of the video processing algorithms and metrics results, and an evaluation program to evaluate automatically algorithm outputs on large amounts of data. An XML format is defined for the different input files (detected objects from one or several cameras, ground-truth and events). XSD files and associated classes are used to check, read and write automatically the different XML files. The design of the software is based on a system of interfaces-plugins. This architecture

allows the user to develop specific treatments according to her/his application (e.g. metrics). There are 6 user interfaces:

- The video interface defines the way to load the images in the interface. For instance the user can develop her/his plugin based on her/his own video format. The tool is delivered with a plugin to load JPEG image, and ASF video.

- The object filter selects which objects (e.g. objects far from the camera) are processed for the evaluation. The tool is delivered with 3 filters.

- The distance interface defines how the detected objects match the ground-truth objects based on their bounding box. The tool is delivered with 3 plugins comparing 2D bounding boxes and 3 plugins comparing 3D bounding boxes.

- The frame metric interface implements metrics (e.g. detection metric, classification metric, ...) which can be computed on each frame of the video. The tool is delivered with 5 frame metrics.

- The temporal metric interface implements metrics (e.g. tracking metric, ...) which are computed on the whole video sequence. The tool is delivered with 3 temporal metrics.

- The event metric interface implements metrics to evaluate the recognized events. The tool provides 4 metrics.

Figure 6. GUI of the ViSEvAl software

The GUI is composed of 3 different parts:

1. The visualization of results windows dedicated to result visualization (see Figure 6):

- –

- Window 1: the video window displays the current image and information about the detected and ground-truth objects (bounding-boxes, identifier, type,...).

- –

- Window 2: the 3D virtual scene displays a 3D view of the scene (3D avatars for the detected and ground-truth objects, context, ...).

- –

- Window 3: the temporal information about the detected and ground truth objects, and about the recognized and ground-truth events.

- –

- Window 4: the description part gives detailed information about the objects and the events,

Figure 7. The object window enables users to choose the object to display

Figure 8. The multi-view window

– Window 5: the metric part shows the evaluation results of the frame metrics.

- The object window enables the user to choose the object to be displayed (see Figure 7).

- The multi-view window displays the different points of view of the scene (see Figure 8).

The evaluation program saves, in a text file, the evaluation results of all the metrics for each frame (whenever it is appropriate), globally for all video sequences or for each object of the ground truth. The ViSEvAl software was tested and validated into the context of the Cofriend project through its partners

(Akka, ...). The tool is also used by IMRA, Nice hospital, Institute for Infocomm Research (Singapore), ... The software version 1.0 was delivered to APP (French Program Protection Agency) on August 2010. ViSEvAl is under GNU Affero General Public License AGPL (http://www.gnu.org/licenses/) since July 2011. The tool is available on the web page : http://www-sop.inria.fr/teams/pulsar/EvaluationTool/ViSEvAl_Description.html

5.3. Clem

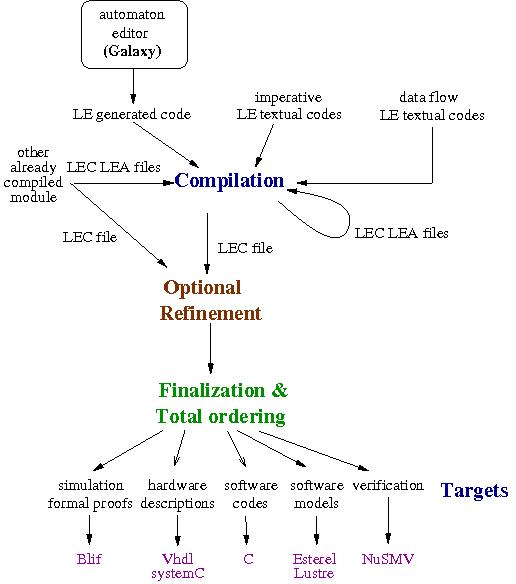

The Clem Toolkit [68](see Figure 9) is a set of tools devoted to design, simulate, verify and generate code for LE [18] [ 81 ] programs. LE is a synchronous language supporting a modular compilation. It also supports automata possibly designed with a dedicated graphical editor and implicit Mealy machine definition.

Each LE program is compiled later into lec and lea files. Then when we want to generate code for different backends, depending on their nature, we can either expand the lec code of programs in order to resolve all abstracted variables and get a single lec file, or we can keep the set of lec files where all the variables of the main program are defined. Then, the finalization will simplify the final equations and code is generated for simulation, safety proofs, hardware description or software code. Hardware description (Vhdl) and software code (C) are supplied for LE programs as well as simulation. Moreover, we also generate files to feed the NuSMV model checker [65] in order to perform validation of program behaviors. In 2014, LE supports data value for automata and CLEM is used in 2 research axes of the team (SAM and SynComp). CLEM is registered at the APP since May 2014.

The work on CLEM was published in [68], [ 69 ], [18], [ 19 ]. Web page: http://www-sop.inria.fr/teams/pulsar/projects/Clem/

6. New Results

6.1. Highlights of the Year

NeoSensys, a spin off of the Stars team which aims at commercializing video surveillance solutions for the retail domain, has been created in September 2014.

6.2. Introduction

This year Stars has proposed new algorithms related to its three main research axes : perception for activity recognition, semantic activity recognition and software engineering for activity recognition.

6.2.1. Perception for Activity Recognition

Participants: Julien Badie, Slawomir Bak, Piotr Bilinski, François Brémond, Bernard Boulay, Guillaume Charpiat, Duc Phu Chau, Etienne Corvée, Carolina Garate, Michal Koperski, Ratnesh Kumar, Filipe Martins, Malik Souded, Anh Tuan Nghiem, Sofia Zaidenberg, Monique Thonnat.

Figure 9. The Clem Toolkit

For perception, the main achievements are:

- Our new covariance descriptor has led to many publications and applications already. The work on this topic is now more about the precise use of the descriptor in varied applications than the design of new descriptors.

- The new action descriptors have led to finer gesture classification. As our target application is the detection of the Alzheimer syndrome from gesture analysis, which requires still finer descriptors, we will continue the work on this topic.

- The different shape priors developed (for shape growth enforcement, shape matching, articulated motion) have been formulated and designed so that efficient optimization tools could be used, leading to global optimality guarantees. These particular problems can thus be considered as solved, but there is still much work to be done on shape and related optimization, in particular to obtain shape statistics for human action recognition.

- The success obtained in the control of trackers is a proof of concept, but this work still needs to be

pursued to get more practical and to be applied on more real world videos. More precisely, the new results for perception for activity recognition are:

- People Detection for Crowded Scenes (6.3),

- Walking Speed Detection on a Treadmill using an RGB-D camera : experimentations and results (6.4),

- Head detection using RGB-D camera (6.5),

- Video Segmentation and Multiple Object Tracking (6.6),

- Enforcing Monotonous Shape Growth or Shrinkage in Video Segmentation (6.7),

- Multi-label Image Segmentation with Partition Trees and Shape Prior (6.8),

- Automatic Tracker Selection and Parameter Tuning for Multi-object Tracking (6.9),

- An Approach to Improve Multi-object Tracker Quality using Discriminative Appearances and Motion Model Descriptor (6.10),

- Person re-identification by pose priors(6.11),

- Global tracker : an online evaluation framework to improve tracking quality (6.12),

- Human action recognition in videos (6.13),

- Action Recognition using 3D Trajectories with Hierarchical Classifier (6.14),

- Action Recognition using Video Brownian Covariance Descriptor for Human (6.15),

- Towards Unsupervised Sudden Group Movement Discovery for Video Surveillance (6.16).

6.2.2. Semantic Activity Recognition

Participants: Vania Bogorny, Luis Campos Alvares, Vasanth Bathrinarayanan, Guillaume Charpiat, Duc Phu Chau, Serhan Cosar, Carlos F. Crispim Junior, Giuseppe Donatielo, Baptiste Fosty, Carolina Garate, Alvaro Gomez Uria Covella, Alexandra Konig, Farhood Negin, Anh-Tuan Nghiem, Philippe Robert, Carola Strumia.

For activity recognition, the main advances on challenging topics are:

- The utilization by clinicians for their everyday work of a first monitoring system able to recognize complex activities, to evaluate in real-time older people performance in an ecological room at Nice Hospital.

- The successful processing of over 80 older people videos and matching their performance for autonomy at home (e.g. walking efficiency) and cognitive disorders (e.g. realisations of executive tasks) with gold standard scales (e.g. NPI, MMSE). This research work contributes to the early detection of deteriorated health status and the early diagnosis of illness.

- The fusion of events coming from camera networks and heterogeneous sensors (e.g. RGB videos, Depth maps, audio, accelerometers).

- The management of the uncertainty of primitive events.

- The generation of event models in an unsupervised manner.

For this research axis, the contributions are :

- Autonomous Monitoring for Securing European Ports (6.17),

- Video Understanding for Group Behavior Analysis (6.18),

- Evaluation of an event detection framework for older people monitoring: from minute to hour-scale monitoring and Patients autonomy and dementia assessment (6.19),

- Uncertainty Modeling Framework for Constraint-based Event Detection in Vision Systems (6.20),

- Assisted Serious Game for older people (6.21),

- Enhancing Pre-defined Event Models using Unsupervised Learning (6.22),

- Using Dense Trajectories to Enhance Unsupervised Action Discovery (6.23),

- Abnormal Event Detection in Videos and Group Behavior Analysis (6.24).

6.2.3. Software Engineering for Activity Recognition

Participants: François Brémond, Daniel Gaffé, Sabine Moisan, Annie Ressouche, Jean-Paul Rigault, Omar Abdalla, Mohamed Bouatira, Ines Sarray, Luis-Emiliano Sanchez.

For the software engineering part, the main achievements are the Software Engineering methods and tools applied to video analysis. We have demonstrated that these approaches are appropriate and useful for video analysis systems:

- Run time adaptation using MDE is a promising approach. Our current prototype resorts to tools and technologies which were readily available. This made possible a proof of concepts.

- Introducing metrics in feature models was valuable to reduce the huge set of valid configurations after a dynamic context change and to provide a real time selection of an appropriate running configuration.

- The synchronous approach is well suited to describe reactive systems in a generic way, it has a well-established formal foundation allowing for automatic proofs, and it interfaces nicely with most model-checkers.

The contributions for this research axis are:

- Model-Driven Engineering for Activity Recognition Systems(6.25),

- Scenario Analysis Module (6.26),

- The Clem Workflow (6.27),

- Multiple Services for Device Adaptive Platform for Scenario Recognition (6.28).

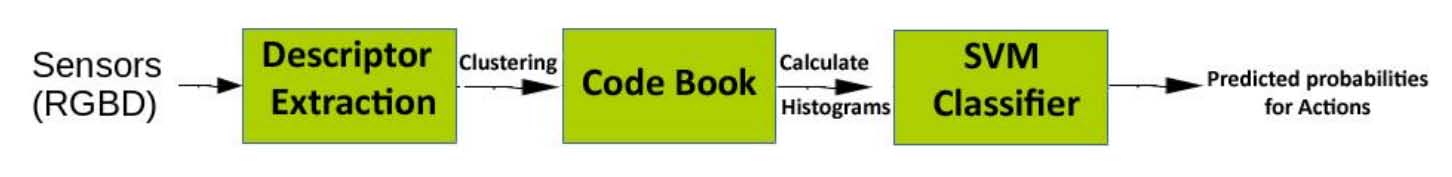

6.3. People Detection for Crowded Scenes Participants: Malik Souded, François Brémond. keywords: people detection, crowded scenes, features, boosting. This works aims at proposing an efficient people detection algorithm which can deal with crowded scenes.

6.3.1. Early Work

We have previously proposed an approach which optimizes state-of-the-art methods [Tuzel 2007, Yao 2008], based on training cascade of classifiers using LogitBoost algorithm on region covariance descriptors. This approach performs in real time and provides good detection performances in low to medium density scenes (see some examples in figure 10). However, this approach shows its limits on crowded scenes. Both detection accuracy and detection time are highly impacted in this case. The detection time increases dramatically due to the number of people in images, which forces the evaluation of many cascade levels, while the numerous partial occlusions highly decrease the detection rate (the considered detector is a full-body detector). To deal with these issues, we are working on a new approach.

6.3.2. Current Work

Our approach is based on training a cascade of classifiers using Boosting algorithms too, but on large sets of various features with several parameters for each of them (LBP, Haar-Like, HOG, Region Covariance Descriptor, etc.). The variety of features is motivated by three main reasons:

- Using fast features like LBP and Haar-like in the first levels of the cascade allows a fast rejection of a high part of negatives. The remaining ones will be rejected by a more sophisticated feature like Covariance Descriptor. This will highly decrease the detection time.

- Covariance Descriptor are not discriminative enough for very small regions. Our aim is to train the new detector on specific body parts, especially the upper one (shoulders and heads) to increase detection rate in highly crowded scenes (with a high rate of partial occlusions). Using a large set of various features allows the training system to select the ones which provide the best discriminative power for these regions.

- The possibility to combine several features to describe the same region, even by a simple concatena

tion, providing more discriminative power than using single features. Another part of this approach consists in the optimization of the detector at two levels:

- Optimizing the training process by first clustering both positive and negative training samples. This clustering allows to focus on the hard samples which are too close to the other class from a classification point of view, providing more accurate detectors.

- Iterative training of several detectors on randomly selected samples, and weighting of the training samples according to their classification confidence, which allows to improve the clustering process.

The evaluation of this approach is still in progress.

6.4. Walking Speed Detection on a Treadmill using an RGB-D camera : experimentations and results Participants: Baptiste Fosty, François Brémond. keywords: RGB-D camera analysis, walking speed, serious games Within the context of the developement of serious games for people suffering from Alzheimer disease (Az@Game project), we have developed an algorithm to compute the walking speed of a person on a treadmill. The goal is to use this speed inside the game to control the displacement of the avatar, and then for the patient to perform some physical as well as cognitive tasks. For the evaluation of the accuracy of the algorithm, we collected a video data set of healthy people walking on a motorized treadmill. Protocol. With the help of a specialist in the domain of physical activities, a protocol has been set up to cover the spectrum of the possible walking speeds and to prove the reproducibility of the results. This protocol consists in performing three times ten minutes of walking on the motorized treadmill, each attempt being itself divided in five times two minutes at the following speeds : 1.5 km/h, 2.5 km/h, 3.5 km/h, 4.5 km/h and 5.5 km/h. Participants, mostly people from the age of 18 to 60 without any physical disorder that could influence the gait, were asked to keep a natural gait and to follow the rotation of the treadmill.

Ground truth. The quantitative performances of the walking speed computation are evaluated by comparison with the speed of the walking person. The speed references are twofold :

- a theoretical value : the speed displayed by the treadmill, set up by the participant but imposed by the protocol (see Figure 12, red graph),

- a practical value : white marks have been painted on the treadmill to recompute the real speed of the rotation and so the walking speed (see Figure 12, green and blue graphs).

Results. The results presented herein are based on the videos of 36 participants who performed the protocol described above, with 17 males and 19 females, with an average age of 32.1±7.7 years, an average height of 171.1±9.1 cm and an average weight of 67.4±13.6 kg.

The table in figure 11 shows the statistical evaluation of the performances of the system. The average column shows that the accuracy of the system is better for the median speeds (around 4.5km/h). When the person is walking slower, the system overestimate the speed due to the wrongly detected steps whereas when faster, there is an underestimation because of missing the exact time when the distance between feet is maximum (framerate too low). A paper reporting this work is actually under writing process.

6.5. Head Detection Using RGB-D Camera

Participants: Marine Chabran, François Brémond. keywords: RGB-D camera analysis, head detection, serious games The goal of this work is to improve a head detection algorithm using RGB-D sensor (like a Kinect camera)

for action recognition as part of a study of autism. The psychologists want to compare the learning process of

children with autism syndrome depending on games (digital or physical toys). The algorithm described in [79] represents a head by its center position. It takes three steps to determine this point :

• Determine possible head center positions using a head model : inner circle radius=6 cm, outer circle radius=20 cm (Figure 13). A good inner point is a point on the inner circle verifying :

depthHeadCenter + 30cm > depthInnerPoint > depthHeadCenter − 30cm.

A good outer point is a point on the outer circle verifying :

depthHeadCenter < depthOuterPoint + 15cm.

- Merge close head centers separated by less than 4 pixels.

- Select final head center according to its score (calculated according to the number of good inner and outer points).

Figure 13. Each circle is divided in n parts (n=8). The points on the inner circle must have a similar depth with the center point, the points on the outer circle must be further than the center point compared to the camera

For now, it works well within video where people are close to the camera (about 1 meter) and without any

background just behind them (Figure 14). The problem is when the person is sitting and the head is ahead of the body (Figure 15) or close to a wall, the difference between head depth and outer circle depth becomes not sufficient (about 10 cm).

We have evaluated the performance of this algorithm with two data sets (Table 1). For Lenval Hospital data set, we have evaluated 2 series of 200 frames, for the Smart Home data set, we have evaluated 3 series of 300 frames (a total of 1300 heads).

Table 1. Performance of head detection and people detection on two different data sets.

| Videos | Head Detection (%) | People detection (%) |

| Lenval Hospital dataset (Figure 14) | 89.7 | 96.9 |

| Rest home dataset (Figure 15) | 62.8 | 85.3 |

6.6. Video Segmentation and Multiple Object Tracking

Participants: Ratnesh Kumar, Guillaume Charpiat, Monique Thonnat.

keywords:Fibers, Graph Partitioning, Message Passing, Iterative Conditional Modes, Video Segmentation, Video Inpainting This year we focussed on multiple object tracking, and writing of the thesis manuscript of Ratnesh (defense

on December 2014). The first contribution of this thesis is in the domain of video segmentation wherein the objective is to obtain a dense and coherent spatio-temporal segmentation. We propose joining both spatial and temporal aspects of a video into a single notion Fiber. A Fiber is a set of trajectories which are spatially connected by a mesh. Fibers are built by jointly assessing spatial and temporal aspects of the video. Compared to the state-of-the-art, a fiber based video segmentation presents advantages such as a natural spatio-temporal neighborhood accessor by a mesh, and temporal correspondences for most pixels in the video. Furthermore, this fiber-based segmentation is of quasi-linear complexity w.r.t. the number of pixels. The second contribution is in the realm of multiple object tracking. We proposed a tracking approach which utilizes cues from point tracks, kinematics of moving objects and global appearance of detections. Unification of all these cues is performed on a Conditional Random Field. Subsequently this model is optimized by a combination of message passing and an Iterated Conditional Modes (ICM) variant to infer object-trajectories. A third, minor, contribution relates to the development of suitable feature descriptor for appearance matching of persons. All of our proposed approaches achieve competitive and better results (both qualitatively and quantitatively) than state-of-the-art open source datasets.

This first part of the thesis was published at IEEE WACV at the beginning of this year [43], and the work on multiple object tracking was recently presented at Asian Conference on Computer Vision [44]

Sample visual results from our recent publication [44] can be seen in Figure 16.

6.7. Enforcing Monotonous Shape Growth or Shrinkage in Video Segmentation

Participant: Guillaume Charpiat [contact].

This work has been done in collaboration with Yuliya Tarabalka (Ayin team, Inria-SAM), Bjoern Menze (Technische Universität München, Germany), and Ludovic Brucker (NASA GSFC, USA) [http://www.nasa. gov].

keywords: Video segmentation, graph cut, shape analysis, shape growth The automatic segmentation of objects from video data is a difficult task, especially when image sequences are subject to low signal-to-noise ratio or low contrast between the intensities of neighboring structures. Such challenging data are acquired routinely, for example, in medical imaging or satellite remote sensing. While individual frames can be analyzed independently, temporal coherence in image sequences provides a lot of

information not available for a single image. In this work, we focused on segmenting shapes that grow or shrink monotonically in time, from sequences of extremely noisy images. We proposed a new method for the joint segmentation of monotonically growing or shrinking shapes in a

time sequence of images with low signal-to-noise ratio [32]. The task of segmenting the image time series is expressed as an optimization problem using the spatio-temporal graph of pixels, in which we are able to impose the constraint of shape growth or shrinkage by introducing unidirectional infinite-weight links connecting pixels at the same spatial locations in successive image frames. The globally-optimal solution is computed with graph-cuts. The performance of the proposed method was validated on three applications: segmentation of melting sea ice floes; of growing burned areas from time series of 2D satellite images; and of a growing brain tumor from sequences of 3D medical scans. In the latter application, we imposed an additional intersequences inclusion constraint by adding directed infinite-weight links between pixels of dependent image structures. Figure 17 shows a multi-year sea ice floe segmentation result. The proposed method proved to be robust to high noise and low contrast, and to cope well with missing data. Moreover, in practice, its complexity was linear in the number of images.

6.8. Multi-label Image Segmentation with Partition Trees and Shape Prior

Participant: Guillaume Charpiat [contact].

This work has been done in collaboration with Emmanuel Maggiori and Yuliya Tarabalka (Ayin team, Inria-SAM).

keywords: partition trees, multi-class segmentation, shape priors, graph cut The multi-label segmentation of images is one of the great challenges in computer vision. It consists in the simultaneous partitioning of an image into regions and the assignment of labels to each of the segments. The problem can be posed as the minimization of an energy with respect to a set of variables which can take one of multiple labels. Throughout the years, several efforts have been done in the design of algorithms that minimize such energies.

We propose a new framework for multi-label image segmentation with shape priors using a binary partition tree [50]. In the literature, such trees are used to represent hierarchical partitions of images, and are usually computed in a bottom-up manner based on color similarities, then processed to detect objects with a known shape prior. However, not considering shape priors during the construction phase induces mistakes in the later segmentation. This study proposes a method which uses both color distribution and shape priors to optimize the trees for image segmentation. The method consists in pruning and regrafting tree branches in order to minimize the energy of the best segmentation that can be extracted from the tree. Theoretical guarantees help reducing the search space and make the optimization efficient. Our experiments (see Figure 18) show that the optimization approach succeeds in incorporating shape information into multi-label segmentation, outperforming the state-of-the-art.

6.9. Automatic Tracker Selection and Parameter Tuning for Multi-object Tracking Participants: Duc Phu Chau, Slawomir Bak, François Brémond, Monique Thonnat. Keywords: object tracking, machine learning, tracker selection, parameter tuning Many approaches have been proposed to track mobile objects in a scene [87], [ 45 ]. However the quality of tracking algorithms always depends on video content such as the crowded level or lighting condition. The selection of a tracking algorithm for an unknown scene becomes a hard task. Even when the tracker has already been determined, there are still some issues (e.g. the determination of the best parameter values or the online estimation of the tracking reliability) for adapting online this tracker to the video content variation. In order to overcome these limitations, we propose the two following approaches.

The main idea of the first approach is to learn offline how to tune the tracker parameters to cope with the tracking context variations. The tracking context of a video sequence is defined as a set of six features: density of mobile objects, their occlusion level, their contrast with regard to the surrounding background, their contrast variance, their 2D area and their 2D area variance. In an offline phase, training video sequences are classified by clustering their contextual features. Each context cluster is then associated to satisfactory tracking parameters using tracking annotation associated to training videos. In the online control phase, once a context change is detected, the tracking parameters are tuned using the learned parameter values. This work has been published

in [30]. A limitation of the first approach is the need of annotated data for training. Therefore we have proposed a second approach without training data. In this approach, the proposed strategy combines an appearance tracker and a KLT tracker for each mobile object to obtain the best tracking performance (see figure 19). This helps to better adapt the tracking process to the spatial distribution of objects. Also, while the appearance-based tracker considers the object appearance, the KLT tracker takes into account the optical flow of pixels and their spatial neighbours. Therefore these two trackers can improve alternately the tracking performance.

The second approach has been experimented on three public video datasets. Figure 20 presents correct tracking results of this approach even with strong object occlusion in PETS 2009 dataset. Table 2 presents the evaluation results of the proposed approach, the KLT tracker, the appearance tracker and different trackers from the state of the art. While using separately the KLT tracker or the appearance tracker, the performance is lower than other approaches from the state of the art. The proposed approach by combining these two trackers improves significantly the tracking performance and obtains the best values for both metrics. This work has been published in [39].

Figure 20. Tracker result: Three persons of Ids 7535, 7228 and 4757 (marked by the cyan arrow) are occluded each other but their identities are kept correctly after occlusion.

Table 2. Tracking results on the PETS sequence S2.L1, camera view 1, sequence time 12.34. The best values are printed in bold.

| Method | MOTA | MOTP |

|---|---|---|

| Berclaz et al. [60] | 0.80 | 0.58 |

| Shitrit et al. [86] | 0.81 | 0.58 |

| KLT tracker | 0.41 | 0.76 |

| Appearance tracker | 0.62 | 0.63 |

| Proposed approach | 0.86 | 0.72 |

6.10. An Approach to Improve Multi-object Tracker Quality Using Discriminative Appearances and Motion Model Descriptor Participants: Thi Lan Anh Nguyen, Duc Phu Chau, François Brémond. Keywords: Tracklet fusion, Multi-object tracking Many recent approaches have been proposed to track multi-objects in a video. However, the quality of trackers is remarkably effected by video content. In the state of the art, several algorithms are proposed to handle this issue. The approaches in [39] and [64] propose methods which compute online or learn descriptor weights during tracking process. These algorithms adapt the tracking to the scene variations but are less effective when mis-detection occurs in a long period of time. Inversely, the algorithms in [59] and [58] can recover a long term mis-detection by fusing tracklets. However, the descriptor weights in these tracklet fusion algorithms are fixed in the whole video. Furthermore, above algorithms track objects based on object appearance which is not reliable enough when objects look similar to each other.

In order to overcome mentioned issues, the proposed approach brings three contributions: (1) appearance descriptors and motion model combination, (2) online discriminative descriptor weight computation and

(3) discriminative descriptors based tracklet fusion. In particular, the appearance of one object can be discriminative with other objects in this scene but can be similar with other objects in another scene. Therefore, tracking objects based on only object appearance is less effective. In order to improve tracker quality, assuming that objects move with constant velocity, this approach firstly combines a constant velocity model from [70] and other appearance descriptors. Continuously, discriminative descriptor weights are computed online to adapt the tracking to each video scene. The more a descriptor discriminates one tracklet over other tracklets, the higher its weight value is. Next, based on these descriptor weights, the similarity score between the target tracklet with its candidate is computed. In the last step, tracklets are fused to a long trajectory by Hungarian algorithm with the optimization of global similarity scores.

The proposed approach gets results of tracker in [63] as input and is tested on challenge datasets. This approach achieves comparable results with other trackers from the state of the art. Figure 1 shows that the tracklet keeps its ID even when occlusion occurs. Table 1 shows the better performance of this approach compared to other trackers from the state of the art.

Table 3. Tracking results on datasets: TUD-Stadtmitte and TUD-crossing. The best values are printed in bold

| Dataset | Method | MT(%) | PT(%) | ML(%) |

| TUD-Stadtmitte TUD-Stadtmitte TUD-Stadtmitte TUD-Stadtmitte TUD-Stadtmitte | [57] [30] [71] [95] Ours | 60.0 70.0 70.0 70.0 70.0 | 30.0 10.0 30.0 30.0 30.0 | 10.0 20.0 0.0 0.0 0.0 |

| TUD-Crossing TUD-Crossing | [89] Ours | 53.8 53.8 | 38.4 46.2 | 7.8 0.0 |

6.11. Person Re-identification by Pose Priors Participants: Slawomir Bak, Sofia Zaidenberg, Bernard Boulay, Filipe Martins, Francois Brémond. keywords: re-identification, pose estimation, metric learning Human appearance registration, alignment and pose estimation